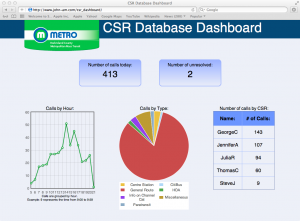

Static demo (w/ dummy data)

Before I begin, I want to say that the code here, along with any other code I have posted, is posted with the express permission of the company that originally had me write it.

This is something I’ve been anxious to write about. I’m really happy with the results, and it helped out quite a bit at MetroLINK. It’s a web dashboard that shows call data from Metro’s Customer Service Representatives (CSRs). It pulls data from an Access database through ODBC and uses PHP to generate the main dashboard as well as the drill-down pages. The graphs are provided by the excellent Flot JavaScript library.

Last year it was decided that more information had to be gathered about how many calls the CSRs were taking and what those calls were about. Our phone system didn’t support anything beyond basic logging, so until it could be upgraded, something needed to be put in place that would let the CSRs track their own calls. I opted for Access because it was a database system others were already familiar with, and I could build an interface easily enough using VBA and Access’ own forms. We saw results almost immediately and had much better insight into what the CSRs were doing.

Just using the Access built-in reporting functionality was great, but it was missing the “live” element. That’s when I decided to start working on this in my spare time. I discussed what we would need on a dashboard with my co-workers, and then set out to make it happen.

I had some hesitation when I was figuring out how to get the data from the Access file into PHP. The same file was being used by the CSRs to enter that data, so I was worried about the file being locked. The Access forms were already split to avoid this, but I didn’t know how a connection from PHP would behave. With ODBC, setting up the connection to the Access file was a breeze, and I was pleased to find that it handled multiple connections without issue. On top of that, I could specify that the connection was read-only, which provided some security as well.

When I was designing the dashboard I wanted it to have a similar appearance to the public-facing website gogreenmetro.com, so I borrowed the color scheme and title image. While the data only changed on each refresh (more on that later), I wanted the dashboard to appear to have activity in it. To that end I included several hover-over effects and made things respond in useful ways where I could, primarily in the graphs and tables, where you can highlight parts and get specific information about a point or pie piece. While it isn’t perfect, it gives the dashboard a little more polish and makes it feel more “alive” than the same page without those elements.

After the main dashboard was completed I started working on the drill-down pages. They can both be accessed by clicking the numbers for total number of calls and number of unresolved on the main page. The unresolved drill-down is just a larger version of the breakdown by CSR, which is just building a table. But the number of calls drill-down introduced some challenges.

On the main page I used the Hour function to group calls by hour, and sent that to Flot. It was simple, and it worked for the purposes of that graph. For the more advanced graphs, though, that method was no longer going to work. I had to use Flot’s time support, which meant I needed milliseconds since the Unix epoch, as that’s JavaScript’s native time format. None of this was too challenging until time zones entered the picture. Using DateDiff to get seconds since the epoch gave me a sort of “false UTC” that treats the times as if there were no time zone offset. Since the data would always be correct in the actual database and the presentation wasn’t affected, I saw no problem with this. The Flot API documentation actually encourages this approach.
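As a rough illustration of the data shape Flot’s time mode works with, here is a minimal JavaScript sketch. It assumes the query returns each call as seconds since the epoch (the “false UTC” values described above). The function name `toFlotSeries` and the sample values are hypothetical, not taken from the dashboard’s code.

```javascript
// Group call timestamps (seconds since the epoch) into hourly buckets and
// return [x, y] pairs, where x is milliseconds since the epoch and y is the
// number of calls in that hour. This is the pair format Flot plots.
function toFlotSeries(callSeconds) {
  const counts = new Map();
  for (const s of callSeconds) {
    // Round down to the start of the hour, then convert seconds to milliseconds.
    const hourStartMs = Math.floor(s / 3600) * 3600 * 1000;
    counts.set(hourStartMs, (counts.get(hourStartMs) || 0) + 1);
  }
  // Sort by time so the line is drawn left to right.
  return Array.from(counts.entries()).sort((a, b) => a[0] - b[0]);
}

// Hypothetical sample: four calls spread over three hours.
const series = toFlotSeries([3600, 3700, 7300, 0]);

// With jQuery and Flot loaded, a series like this could be plotted with
// something along the lines of:
//   $.plot("#placeholder", [series], { xaxis: { mode: "time" } });
```

Doing the bucketing in one place keeps the values sent to the browser small: one pair per hour rather than one entry per call.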

Until I checked the tooltips. JavaScript corrects for the time zone when you start using its date functions, so all my times were coming in a few hours off. PHP provides a great way to get the local time zone offset in seconds, so I used that to shift the timestamps before the page was rendered. A side effect of this is that the times change depending on where the page is viewed, so 3pm Central would be 1pm Pacific and so on. In this context that would probably count as a bug, but in other contexts it would be a feature.
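The correction can be sketched in JavaScript as follows. This is an illustration under assumptions rather than the dashboard’s actual code: `toRealEpochMs` and `formatFalseUtc` are hypothetical helpers, and the offset is assumed to be in seconds and negative west of UTC, which is the convention PHP’s `date('Z')` uses. The second helper is an alternative technique, not the one described above: it leaves the “false UTC” value alone and reads it back with the UTC getters.

```javascript
// Shift a "false UTC" timestamp (local wall-clock time stored as if it were
// UTC) to a real epoch timestamp. offsetSeconds is the zone's offset from UTC
// in seconds, negative west of UTC (for example -21600 for UTC-6).
function toRealEpochMs(falseUtcMs, offsetSeconds) {
  return falseUtcMs - offsetSeconds * 1000;
}

// Alternative: keep the false-UTC value and format it with the UTC getters,
// so the viewer's own time zone never enters the calculation.
function formatFalseUtc(falseUtcMs) {
  const d = new Date(falseUtcMs);
  const hh = String(d.getUTCHours()).padStart(2, "0");
  const mm = String(d.getUTCMinutes()).padStart(2, "0");
  return hh + ":" + mm;
}

// A call logged at 3pm wall-clock time, stored as if it were 3pm UTC.
const threePmFalseUtc = Date.UTC(2012, 0, 5, 15, 0, 0);

// Assuming the server is at UTC-6, the real instant is 21:00 UTC.
const realMs = toRealEpochMs(threePmFalseUtc, -21600);

// Always the recorded wall-clock time, wherever the page is viewed.
const label = formatFalseUtc(threePmFalseUtc); // "15:00"
```

With the first approach, the browser’s local date functions show the time in the viewer’s own zone, which is the side effect described above. With the second, every viewer sees the wall-clock time as it was recorded.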

In all, this project taught me a lot. It reinforced my knowledge of things like JSON, HTML/CSS, and how to implement designs to work cross-browser. It gave me a chance to use PHP for a project, and I learned about it in the process. Finally, it also gave me a chance to really use Flot and jQuery. Being able to bring all these things together in one consistent project was a great experience.